
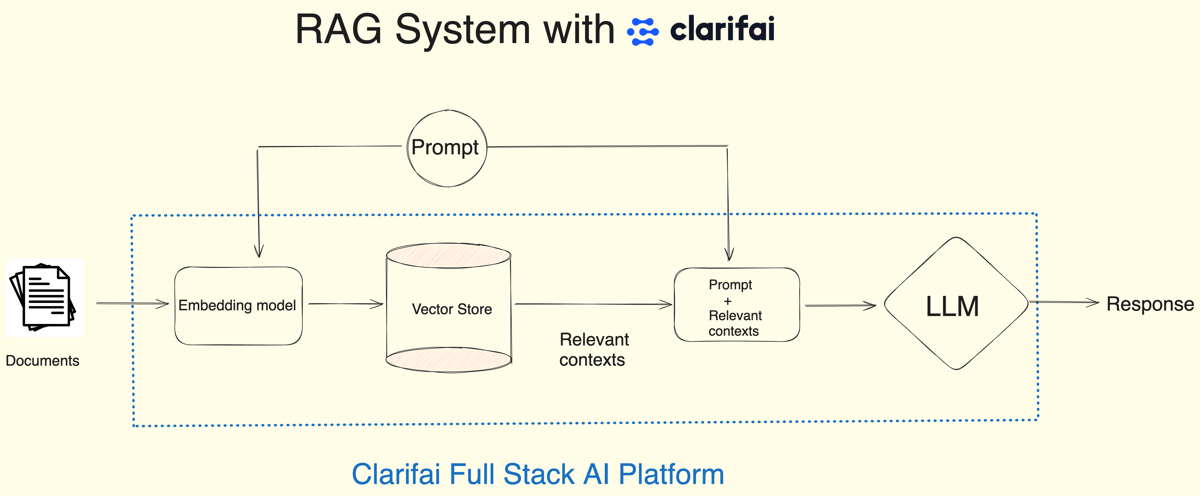

What is Retrieval-Augmented Generation?

Large Language Models (LLMs) are not up to date, because their knowledge stops at their training cutoff. They also lack domain-specific knowledge: they are trained for general-purpose tasks, so you cannot use them out of the box to ask questions about your own data.

This is where Retrieval-Augmented Generation (RAG) comes in: an architecture that retrieves the most relevant, contextually important data and supplies it to the LLM when it answers a question.

The three key components for building a RAG system are:

- Embedding models, which embed the data into vectors.

- A vector database to store and retrieve these embeddings, and

- A Large Language Model, which uses the context retrieved from the vector database to answer.
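To make the first component concrete, here is a minimal sketch of what an embedding model does: turn text into a fixed-length vector. It uses the hashing trick over word counts, an assumption chosen so the example runs with only the standard library. It is not how Clarifai's embedding models work, and it captures word overlap rather than meaning.

```python
import hashlib
import math
import re


def toy_embed(text: str, dim: int = 64) -> list[float]:
    """Map text to a fixed-length, L2-normalized vector using the hashing trick.

    Illustrative stand-in for a real embedding model: similar vectors mean
    shared words, not shared meaning.
    """
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # Stable hash (Python's built-in hash() is salted per process).
        digest = hashlib.sha256(token.encode("utf-8")).digest()
        index = int.from_bytes(digest[:4], "big") % dim
        vec[index] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    # Normalize so dot products between vectors equal cosine similarity.
    return [v / norm for v in vec] if norm else vec
```

A real embedding model plays the same role in the pipeline (text in, vector out), but places texts with similar meaning close together even when they share no words.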

Clarifai provides all three in a single platform, letting you build RAG applications seamlessly.

How to build a Retrieval-Augmented Generation system

As part of our "AI in 5" series, where we teach you how to create amazing things in just 5 minutes, in this blog we will see how to build a RAG system in just 4 lines of code using Clarifai's Python SDK.

Step 1: Install Clarifai and set your Personal Access Token as an environment variable

First, install the Clarifai Python SDK with pip (pip install clarifai).

Next, set your Clarifai Personal Access Token (PAT) as an environment variable so the SDK can access the LLMs and the vector store. To create a new Personal Access Token, sign up for Clarifai or, if you already have an account, log in to the portal and go to the Security option in the settings. Create a new personal access token by providing a token description and selecting the scopes. Copy the token and set it as an environment variable.
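A minimal sketch for failing fast when the token is missing. It assumes the variable name CLARIFAI_PAT, which is the name Clarifai's documentation uses for the PAT; confirm it against the current SDK docs. The helper get_clarifai_pat is hypothetical and not part of the SDK. The SDK is expected to read the variable on its own, so this check only produces a clearer error earlier.

```python
import os


def get_clarifai_pat(var_name: str = "CLARIFAI_PAT") -> str:
    """Hypothetical helper: return the PAT from the environment or raise a clear error."""
    token = os.environ.get(var_name, "").strip()
    if not token:
        raise RuntimeError(
            f"{var_name} is not set. Create a Personal Access Token in the "
            f"Clarifai portal (Settings > Security) and export it as {var_name}."
        )
    return token
```

Calling it at the top of a script or notebook surfaces a missing or empty token before any request to the platform is made.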

Once you have installed the Clarifai Python SDK and set your Personal Access Token as an environment variable, you will see that all you need is these 4 lines of code to build a RAG system. Let's look at them!

Step 2: Set up the RAG system by passing your Clarifai user ID

First, import the RAG class from the Clarifai Python SDK. Then, set up your RAG system by passing your Clarifai user ID.

Use the setup method and pass the user ID. Since you have already signed up for the platform, you can find your user ID under the Account option in the settings.

Once you pass the user ID, the setup method will create:

- A Clarifai app with "Text" as the base workflow. If you are not familiar with apps, they are the basic building blocks for creating projects on the Clarifai platform. Your data, annotations, models, predictions, and searches are contained within apps. Apps act as your vector database: once you upload data to a Clarifai app, it embeds the data and indexes the embeddings according to your base workflow. You can then query these embeddings for similarity.

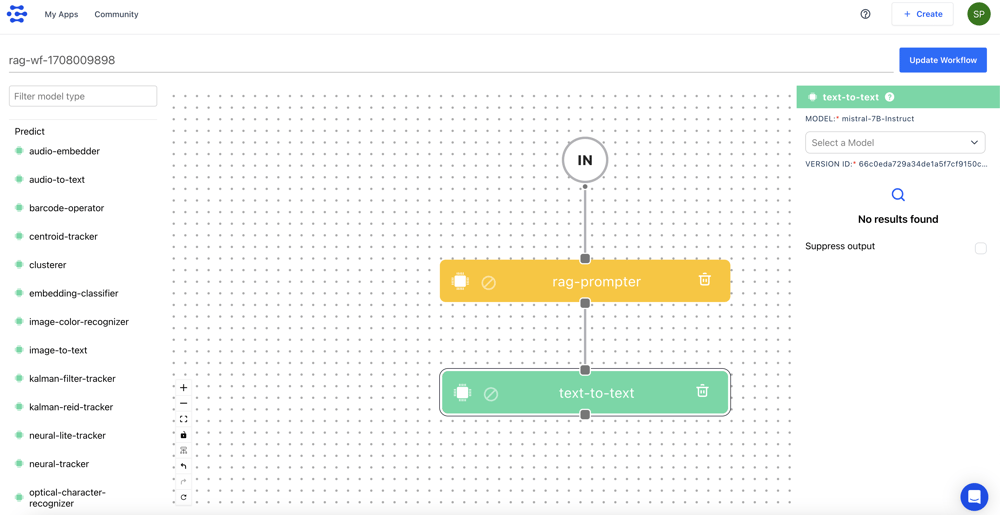

- Next, it will create a RAG prompter workflow inside the app created above. Workflows in Clarifai let you combine multiple models and operators, so you can build powerful multimodal systems for various use cases. Let's look at the RAG prompter workflow and what it does.
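The first item above describes the app acting as a vector database: store embeddings, then query them for similarity. A minimal in-memory sketch of that idea follows. The class name InMemoryVectorStore is hypothetical, and it ranks by brute-force cosine similarity over every stored vector; how Clarifai actually indexes and searches embeddings is not shown here.

```python
import math


class InMemoryVectorStore:
    """Hypothetical toy vector store: holds (id, vector, text) rows, ranks by cosine similarity."""

    def __init__(self) -> None:
        self._rows: list[tuple[str, list[float], str]] = []

    def add(self, doc_id: str, vector: list[float], text: str) -> None:
        """Store one embedded chunk together with its original text."""
        self._rows.append((doc_id, vector, text))

    @staticmethod
    def _cosine(a: list[float], b: list[float]) -> float:
        dot = sum(x * y for x, y in zip(a, b))
        norm_a = math.sqrt(sum(x * x for x in a))
        norm_b = math.sqrt(sum(y * y for y in b))
        if norm_a == 0 or norm_b == 0:
            return 0.0  # treat zero vectors as unrelated to everything
        return dot / (norm_a * norm_b)

    def query(self, vector: list[float], top_k: int = 3) -> list[tuple[str, float, str]]:
        """Return up to top_k rows as (id, score, text), most similar first."""
        scored = [(doc_id, self._cosine(vector, vec), text) for doc_id, vec, text in self._rows]
        scored.sort(key=lambda row: row[1], reverse=True)
        return scored[:top_k]
```

A linear scan is fine for a handful of chunks; production vector databases typically use approximate nearest-neighbor indexes so queries stay fast as the collection grows.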

The workflow has an input, a RAG prompter model type, and a text-to-text model type. Let's walk through the flow. Whenever a user sends an input prompt, the RAG prompter uses that prompt to find the relevant context in the Clarifai vector store.

Next, the context is passed along with the prompt to the text-to-text model, which answers it. By default, this workflow uses the Mistral-7B-Instruct model. The LLM uses both the context and the user query to produce its answer. That's the RAG prompter workflow.
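The hand-off from the RAG prompter to the LLM amounts to combining the retrieved context and the user's question into one prompt. A minimal sketch of that step follows; the function build_rag_prompt and its template wording are hypothetical and are not Clarifai's actual RAG prompter template.

```python
def build_rag_prompt(question: str, context_chunks: list[str], max_chunks: int = 3) -> str:
    """Hypothetical sketch: merge retrieved chunks and the user question into one LLM prompt."""
    selected = context_chunks[:max_chunks]  # keep the prompt within the model's context window
    # Number the chunks so the model (and a reader) can tell them apart.
    context = "\n\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(selected, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )
```

Instructing the model to rely on the supplied context, and to say when it is insufficient, is a common way to keep answers grounded in your documents rather than in the model's general training data.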

You don't need to worry about any of this, because the setup method handles these tasks for you. All you need to do is specify your user ID.

The setup method accepts other parameters as well:

app_url: If you already have a Clarifai app that contains your data, you can pass the URL of that app instead of creating a new app from scratch with your user ID.

llm_url: As we've seen, the prompter workflow uses the Mistral-7B-Instruct model by default, but many open-source and third-party LLMs are available in the Clarifai community. You can pass the URL of your preferred LLM.

base_workflow: As mentioned, the data is embedded in your Clarifai app according to the base workflow. By default, this is the Text workflow, but other workflows are available as well. You can specify your preferred workflow.
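The rules in the parameter descriptions above can be summarized in a small options object. RagSetupOptions is hypothetical: it is not part of the Clarifai SDK and does not call it. It only encodes what this article states: pass user_id to create a new app or app_url to reuse an existing one, llm_url falls back to the workflow's default model when omitted, and base_workflow defaults to Text.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RagSetupOptions:
    """Hypothetical container for the setup parameters described in this article."""

    user_id: Optional[str] = None   # create a new app under this user
    app_url: Optional[str] = None   # or reuse an existing app that already holds your data
    llm_url: Optional[str] = None   # None means: keep the workflow default (Mistral-7B-Instruct)
    base_workflow: str = "Text"     # workflow used to embed uploaded data

    def __post_init__(self) -> None:
        # The article describes user_id and app_url as the two ways to locate an app.
        if not self.user_id and not self.app_url:
            raise ValueError("Provide either user_id (new app) or app_url (existing app).")

    @property
    def creates_new_app(self) -> bool:
        """True when no existing app URL was supplied."""
        return not self.app_url
```

Keeping the choice explicit in one place makes it easy to see, before any setup call, whether a run will create a fresh app or reuse data already on the platform.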

Step 3: Upload your documents

Next, upload your documents to embed them and store them in the Clarifai vector database. You can pass a file path to a document, a folder path to several documents, or a public URL to a document.

In this example, I'm passing the path to a PDF file: a recent survey paper on multimodal LLMs. When you upload the document, it is loaded and parsed into chunks based on the chunk_size and chunk_overlap parameters. By default, chunk_size is set to 1024 and chunk_overlap is set to 200, but you can adjust both.

Once the document is parsed into chunks, the chunks are ingested into the Clarifai app.
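A minimal sketch of how chunk_size and chunk_overlap interact, assuming simple character-based windows. The function chunk_text is hypothetical; the SDK's actual splitter may measure size differently (for example, in tokens) and respect sentence boundaries, so treat this as an illustration of the overlap arithmetic only. With the defaults, each new chunk starts 1024 - 200 = 824 characters after the previous one.

```python
def chunk_text(text: str, chunk_size: int = 1024, chunk_overlap: int = 200) -> list[str]:
    """Hypothetical sketch: fixed-size character windows where neighbors share chunk_overlap chars."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap  # distance between the starts of consecutive chunks
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks
```

For a 2,500-character document, the defaults give three chunks of 1024, 1024, and 852 characters. The overlap reduces the chance that a passage split across a chunk boundary is lost to retrieval, at the cost of storing some text twice.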

Step 4: Chat with your documents

Finally, chat with your data using the chat method. Here, I'm asking it to summarize the PDF file and its research on multimodal large language models.

Conclusion

That's how easy it is to build a RAG system with the Python SDK in 4 lines of code. To summarize: to set up the RAG system, all you need to do is pass your user ID or, if you have your own Clarifai app, pass that app's URL. You can also pass your preferred LLM and workflow.

Next, upload the documents, optionally specifying the chunk_size and chunk_overlap parameters that control how the documents are parsed and chunked.

Finally, chat with your documents. You can find the link to the Colab notebook to implement this here.

If you'd prefer to watch this tutorial, you can find the YouTube video here.
