💡 This post builds on two earlier posts: the one about surveying VLMs, and the one about evaluating VLMs on your own dataset.

Introduction

If you're starting your journey into the world of Vision Language Models (VLMs), you're entering an exciting and rapidly evolving field that bridges the gap between visual and textual data. On the way to fully integrating VLMs into your business, there are roughly three phases you need to go through.

Choosing the Right Vision Language Model (VLM) for Your Business Needs

Selecting the right VLM for your specific use case is essential to unlocking its full potential and driving success for your business. A comprehensive survey of available models can help you navigate the wide range of options, providing a solid foundation for understanding their strengths and applications.

Identifying the Best VLM for Your Dataset

Once you've surveyed the landscape, the next challenge is identifying which VLM best fits your dataset and specific requirements. Whether you're focused on structured data extraction, information retrieval, or another task, narrowing down the ideal model is key. If you're still in this phase, this guide on selecting the right VLM for data extraction offers practical insights to help you make the best choice for your project.

Fine-Tuning Your Vision Language Model

Now that you've selected a VLM, the real work begins: fine-tuning. Fine-tuning is essential to achieving the best performance on your dataset. It makes the VLM better at handling your specific data and helps it generalize to unseen examples from the same domain, ultimately leading to more accurate and reliable results. By customizing the model to your particular needs, you set the stage for success in your VLM-powered applications.

In this article, we dive into the different types of fine-tuning techniques available for VLMs, exploring when and how to apply each approach based on your use case. We'll walk through setting up the code for fine-tuning, with a step-by-step guide to adapting your model to your data. Along the way, we discuss important hyperparameters, such as learning rate, batch size, and weight decay, that can significantly impact the outcome of fine-tuning. We also visualize the results of one such fine-tuning run, comparing the pre-fine-tuning results from a previous post with the improved outputs after fine-tuning. Finally, we wrap up with key takeaways and best practices to keep in mind throughout the fine-tuning process, so you get the best possible performance from your VLM.

Types of Fine-Tuning

Fine-tuning is a crucial process in machine learning, particularly in transfer learning, where pre-trained models are adapted to new tasks. Two primary approaches are LoRA (Low-Rank Adaptation) and full model fine-tuning. Understanding the strengths and limitations of each helps you make informed decisions tailored to your project's needs.

LoRA (Low-Rank Adaptation)

LoRA is a parameter-efficient method designed to make fine-tuning cheaper: it freezes the pre-trained weights and learns small low-rank update matrices alongside them. Here are some key features and benefits:

• Efficiency: LoRA trains only a small number of additional parameters attached to specific layers of the model. This means you can achieve good performance without massive computational resources, making it ideal for environments where resources are limited.

• Speed: Since LoRA fine-tunes fewer parameters, training is usually faster than full model fine-tuning. This allows quicker iterations and experiments, which is especially useful in rapid prototyping phases.

• Parameter Efficiency: LoRA introduces low-rank updates, which help retain the knowledge of the original model while adapting to new tasks. Because the original weights stay frozen, this reduces the risk that the model forgets previously learned knowledge (a phenomenon known as catastrophic forgetting).

• Use Cases: LoRA is particularly effective in scenarios with limited labeled data or a constrained computational budget, such as mobile applications or edge devices. It's also beneficial for large language models (LLMs) and vision models in specialized domains.
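To make the low-rank idea concrete, here is a toy, pure-Python sketch (not the peft or LLaMA-Factory implementation). It keeps a frozen weight matrix W and learns only two small matrices, A (r × d_in) and B (d_out × r), so the layer computes W·x + (alpha/r)·B·A·x. The class name `LoRALinear` and the defaults for `r` and `alpha` are illustrative assumptions. B starts at zero, so before training the layer reproduces the pre-trained output exactly.

```python
import random


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """Hypothetical toy layer: frozen W (d_out x d_in) plus trainable B @ A.

    A has shape (r x d_in) and B has shape (d_out x r). B starts at zero,
    so the initial output equals the frozen layer's output.
    """

    def __init__(self, weight, r=2, alpha=4, seed=0):
        rng = random.Random(seed)
        self.weight = weight  # frozen: never updated during fine-tuning
        d_out, d_in = len(weight), len(weight[0])
        self.scale = alpha / r
        self.A = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]

    def forward(self, x):
        base = matvec(self.weight, x)               # W x
        update = matvec(self.B, matvec(self.A, x))  # B (A x)
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self):
        # Only A and B receive gradients
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


def lora_param_count(d_out, d_in, r):
    """Trainable parameters for one d_out x d_in layer: (full, LoRA rank r)."""
    return d_out * d_in, r * (d_in + d_out)
```

For a single 4096 × 4096 projection, `lora_param_count(4096, 4096, 8)` returns `(16777216, 65536)`: rank-8 LoRA trains about 0.4% of that layer's parameters.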

Full Model Fine-Tuning

Full model fine-tuning involves adjusting the entire set of parameters of a pre-trained model. Here are the main points to consider:

• Resource Intensity: This approach requires significantly more computational power and memory, since it modifies all layers of the model. Depending on the size of the model and dataset, this can mean longer training times and the need for high-performance hardware.

• Potential for Better Results: By adjusting all parameters, full model fine-tuning can lead to improved performance, especially when you have a large, diverse dataset. This method allows the model to fully adapt to the specifics of your new task, potentially resulting in superior accuracy and robustness.

• Flexibility: Full model fine-tuning can be applied to a broader range of tasks and data types. It's often the go-to choice when ample labeled data is available for training.

• Use Cases: Ideal for scenarios where accuracy is paramount and the available computational resources are sufficient, such as large-scale enterprise applications or academic research with extensive datasets.
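A back-of-the-envelope calculation shows why full fine-tuning is so memory-hungry. The sketch below uses a common rule of thumb (an assumption, not a measurement from this article): with mixed-precision Adam, each trainable parameter costs about 16 bytes (bf16 weight and gradient, an fp32 master copy, and two fp32 Adam moments), while a frozen bf16 parameter costs 2 bytes. Activations and framework overhead are ignored, so real usage is higher. The function names are hypothetical, and the 2-billion and 20-million parameter counts in the usage note are round, hypothetical figures.

```python
def full_finetune_memory_gb(num_params, bytes_per_param=16):
    """Rough lower bound (GB) for full fine-tuning with mixed-precision Adam.

    Assumed bytes per parameter: 2 (bf16 weight) + 2 (bf16 grad)
    + 4 (fp32 master weight) + 8 (two fp32 Adam moments) = 16.
    Activations are NOT included.
    """
    return num_params * bytes_per_param / 1e9


def lora_memory_gb(num_params, trainable_params, frozen_bytes=2, trainable_bytes=16):
    """Same estimate when only LoRA parameters get gradients and optimizer state."""
    return (num_params * frozen_bytes + trainable_params * trainable_bytes) / 1e9
```

With these assumptions, `full_finetune_memory_gb(2e9)` gives 32.0 GB before activations, whereas `lora_memory_gb(2e9, 20e6)` gives about 4.3 GB.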

Prompt, Prefix and P-Tuning

These three methods learn the best prompt for your particular task. Essentially, we add learnable prompt embeddings to the input prompt and update them based on the task loss, while the model's own weights stay frozen. This yields prompts optimized for the task without training the model itself.

For example, in prompt tuning for sentiment analysis, the prompt can be adjusted from "Classify the sentiment of the following review:" to "[SOFT-PROMPT-1] Classify the sentiment of the following review: [SOFT-PROMPT-2]", where the soft prompts are embeddings that have no real-world meaning but give the LLM a stronger signal that the user is looking for sentiment classification, removing any ambiguity in the input. Prefix tuning and P-Tuning are variations on the same concept: prefix tuning interacts more deeply with the model's hidden states because its learned prefixes are processed by every layer of the model, while P-Tuning uses more expressive continuous representations for the prompt tokens.
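Mechanically, prompt tuning boils down to concatenating a few trainable vectors with the frozen token embeddings before the model runs. The pure-Python sketch below shows that step; `embedding_table` is a stand-in for the model's frozen input-embedding matrix, and the function names are hypothetical. It prepends the soft prompt only, which is the classic prompt-tuning layout; the example above also places a soft prompt after the instruction, which would simply be a second concatenation.

```python
import random


def init_soft_prompt(num_virtual_tokens, dim, seed=0):
    """Learnable soft-prompt vectors: the ONLY trainable parameters in prompt tuning."""
    rng = random.Random(seed)
    return [[rng.gauss(0, 0.02) for _ in range(dim)] for _ in range(num_virtual_tokens)]


def embed_with_soft_prompt(token_ids, embedding_table, soft_prompt):
    """Prepend soft-prompt vectors to the frozen embeddings of the real tokens.

    embedding_table stands in for the model's frozen input-embedding matrix;
    the (frozen) model then runs on the concatenated sequence as usual, and
    only soft_prompt is updated by the task loss.
    """
    token_embeddings = [embedding_table[t] for t in token_ids]
    return soft_prompt + token_embeddings
```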

Quantization-Aware Training

This is an orthogonal concept in which either full fine-tuning or adapter fine-tuning happens in lower precision, reducing memory and compute. QLoRA is one such example: it keeps the frozen base model quantized to 4 bits and trains LoRA adapters on top of it.
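The core trick behind low-precision fine-tuning is to store weights in fewer bits plus a scale factor and dequantize them when they are used. QLoRA itself uses a 4-bit NormalFloat (NF4) data type with block-wise scales; the sketch below swaps in plain symmetric int8 "absmax" quantization over a whole list, purely to illustrate the idea. The function names are hypothetical.

```python
def quantize_absmax_int8(values):
    """Symmetric per-tensor quantization: map [-max|x|, max|x|] onto [-127, 127]."""
    scale = max(abs(v) for v in values) / 127 or 1.0  # fall back to 1.0 for all-zero input
    q = [round(v / scale) for v in values]             # small integers, storable in int8
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values at use time."""
    return [qi * scale for qi in q]
```

Each dequantized value lands within half a quantization step (`scale / 2`) of the original, which is the precision being traded for memory.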

Mixture of Experts (MoE) Fine-tuning

Mixture of Experts (MoE) fine-tuning involves activating only a subset of model parameters (the experts) for each input, allowing efficient resource use and improved performance on specific tasks. In MoE architectures, a router picks just a few experts to train and activate for a given input (typically per token), so the compute per input stays low even as the total parameter count scales, while maintaining high accuracy. This approach lets the model adaptively leverage the specialized capabilities of different experts, enhancing its ability to generalize across various tasks while reducing computational costs.
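The routing step that makes MoE cheap can be sketched in a few lines: a router scores every expert, only the top-k experts run, and their outputs are mixed with renormalized softmax weights. This is a generic top-k gating sketch, not the routing code of any particular MoE model; the experts here are plain scalar functions standing in for expert feed-forward networks, and all names are hypothetical. In a real MoE layer this decision is made per token.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k=2):
    """Pick the k highest-scoring experts and renormalize their gate weights."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}


def moe_forward(x, experts, router_logits, k=2):
    """Weighted sum of only the selected experts' outputs; the others never run."""
    out = 0.0
    for i, gate in top_k_route(router_logits, k).items():
        out += gate * experts[i](x)
    return out
```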

Considerations for Choosing a Fine-Tuning Approach

When deciding between LoRA and full model fine-tuning, consider the following factors:

- Computational Resources: Assess the hardware you have available. If you are limited in memory or processing power, LoRA may be the better choice.

- Data Availability: If you have a small dataset, LoRA's small number of trainable parameters may help you avoid overfitting. Conversely, if you have a large, rich dataset, full model fine-tuning can exploit that data fully.

- Project Goals: Define what you aim to achieve. If quick iteration and deployment are crucial, LoRA's speed and efficiency may be advantageous. If achieving the highest possible performance is your primary goal, consider full model fine-tuning.

- Domain Specificity: In specialized domains where nuances are critical, full model fine-tuning may provide the depth of adjustment needed to capture these subtleties.

- Overfitting: Keep an eye on validation loss to make sure the model is not overfitting the training data.

- Catastrophic Forgetting: This refers to the phenomenon where a neural network forgets previously learned knowledge when trained on new data, leading to a decline in performance on earlier tasks. Depending on context, it is also referred to as bias amplification or over-specialization. Although similar to overfitting, catastrophic forgetting can occur even when a model performs well on the current validation dataset.
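For the overfitting point, a simple guard is early stopping on validation loss: stop once it has failed to improve for a few consecutive evaluations. The class below is a minimal, framework-agnostic sketch with hypothetical names; Hugging Face Transformers ships an `EarlyStoppingCallback` built on the same idea. Note that it only watches the new task's validation loss, so it will not catch catastrophic forgetting on earlier tasks.

```python
class EarlyStopping:
    """Stop when validation loss hasn't improved by min_delta for `patience` evaluations."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_evals = 0  # consecutive evaluations without improvement

    def step(self, val_loss):
        """Record one evaluation; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_evals = 0
        else:
            self.bad_evals += 1
        return self.bad_evals >= self.patience
```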

To Summarize –

Prompt tuning, LoRA and full model fine-tuning each have their own advantages and suit different scenarios. Understanding the requirements of your project and the resources at your disposal will guide you in selecting the most appropriate fine-tuning method. Ultimately, the choice should align with your goals, whether that's training efficiently or maximizing the model's performance on your specific task.

As we saw in the previous article, the Qwen2 model gave the best accuracy for data extraction. Continuing from there, in the next section we fine-tune Qwen2 on the CORD dataset using LoRA.

Setting Up for Fine-Tuning

Step 1: Download LLaMA-Factory

To streamline the fine-tuning process, you'll need LLaMA-Factory. This tool is designed for efficient training of LLMs and VLMs.

```shell
git clone https://github.com/hiyouga/LLaMA-Factory/ /home/paperspace/LLaMA-Factory/
```

Cloning the training repo

Step 2: Create the Dataset

Format your dataset correctly. A good example of dataset formatting comes from ShareGPT, which provides a ready-to-use template. Make sure your data is in a similar format so that LLaMA-Factory can process it correctly.

ShareGPT's format deviates from the Hugging Face format by keeping the entire ground truth in a single JSON file:

```json
[
  {
    "messages": [
      {
        "content": "<QUESTION>\n<image>",
        "role": "user"
      },
      {
        "content": "<ANSWER>",
        "role": "assistant"
      }
    ],
    "images": [
      "/home/paperspace/Data/cord-images//0.jpeg"
    ]
  },
  ...
]
```

ShareGPT format
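Before training, it can save time to sanity-check every record against this layout. The sketch below is a hypothetical helper, not part of LLaMA-Factory, and it encodes two assumptions: turns alternate user/assistant starting with the user, and the number of `<image>` placeholders matches the number of entries in `images`, which is how the format above pairs text with pictures.

```python
import json


def validate_sharegpt_record(record):
    """Return a list of problems found in one ShareGPT-style record (empty if OK)."""
    problems = []
    messages = record.get("messages", [])
    images = record.get("images", [])
    if not messages:
        problems.append("no messages")
    # Assumption: turns alternate user -> assistant, starting with the user.
    roles = [m.get("role") for m in messages]
    expected = ["user", "assistant"] * (len(messages) // 2 + 1)
    if roles != expected[: len(roles)]:
        problems.append(f"unexpected role order: {roles}")
    # Assumption: one <image> placeholder per entry in "images".
    n_image_tags = sum(m.get("content", "").count("<image>") for m in messages)
    if n_image_tags != len(images):
        problems.append(f"{n_image_tags} <image> tags but {len(images)} images")
    return problems


def validate_sharegpt_file(path):
    """Map record index -> problems, for every record that has at least one."""
    with open(path) as f:
        records = json.load(f)
    return {i: p for i, r in enumerate(records) if (p := validate_sharegpt_record(r))}
```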

Creating the dataset in this format is just a matter of iterating over the Hugging Face dataset and consolidating all the ground truths into one JSON file, like so:

```python
# https://github.com/NanoNets/hands-on-vision-language-models/blob/main/src/vlm/data/cord.py
import json
from pathlib import Path

from datasets import load_dataset
from tqdm import tqdm
from typer import Typer

# load_gt is defined elsewhere in the same repo (not shown here): it converts a
# CORD ground-truth record into the menu/subtotal/total structure, exposed via `.d`.

cli = Typer()

prompt = """Extract the following data from the given image -
For tables I need a json of list of
dictionaries of following keys per dict (one dict per line)
'nm', # name of the item
'price', # total price of all the items combined
'cnt', # quantity of the item
'unitprice' # price of a single item
For sub-total I need a single json of
{'subtotal_price', 'tax_price'}
For total I need a single json of
{'total_price', 'cashprice', 'changeprice'}
the final output should look like and must be JSON parsable
{
    "menu": [
        {"nm": ..., "price": ..., "cnt": ..., "unitprice": ...}
        ...
    ],
    "subtotal": {"subtotal_price": ..., "tax_price": ...},
    "total": {"total_price": ..., "cashprice": ..., "changeprice": ...}
}
If a field is missing,
simply omit the key from the dictionary. Do not infer.
Return only those values that are present in the image.
this applies to highlevel keys as well, i.e., menu, subtotal and total
"""


def load_cord(split="test"):
    # CORD v2 receipts from the Hugging Face Hub
    ds = load_dataset("naver-clova-ix/cord-v2", split=split)
    return ds


def make_message(im, item):
    # One user turn (prompt + image placeholder), one assistant turn (ground-truth JSON)
    content = json.dumps(load_gt(item).d)
    message = {
        "messages": [
            {"content": f"{prompt}<image>", "role": "user"},
            {"content": content, "role": "assistant"},
        ],
        "images": [im],
    }
    return message


@cli.command()
def save_cord_dataset_in_sharegpt_format(save_to: Path):
    save_to = Path(save_to)
    cord = load_cord(split="train")
    messages = []
    save_to.mkdir(parents=True, exist_ok=True)
    # Images go into a sibling folder, e.g. /home/paperspace/Data/images/
    image_root = save_to.parent / "images"
    for ix, item in enumerate(tqdm(cord)):
        im = item["image"]
        to = image_root / f"{ix}.jpeg"
        if not to.exists():
            to.parent.mkdir(parents=True, exist_ok=True)
            im.save(to)
        message = make_message(str(to), item)
        messages.append(message)
    # All records go into a single JSON file, as the ShareGPT layout expects
    out = save_to / "data.json"
    out.write_text(json.dumps(messages, indent=2))
    return out
```

Relevant code to generate data in ShareGPT format

Once the CLI command is in place, you can create the dataset anywhere on your disk.

```shell
vlm save-cord-dataset-in-sharegpt-format /home/paperspace/Data/cord/
```

Step 3: Register the Dataset

LLaMA-Factory needs to know where the dataset lives on disk, along with the dataset format and the names of the keys in the JSON. For this, we modify the data/dataset_info.json file in the LLaMA-Factory repo, like so:

```python
from torch_snippets import read_json, write_json

dataset_info = "/home/paperspace/LLaMA-Factory/data/dataset_info.json"
js = read_json(dataset_info)

# Register the CORD dataset: where it lives, its format, and its key names
js["cord"] = {
    "file_name": "/home/paperspace/Data/cord/data.json",
    "formatting": "sharegpt",
    "columns": {
        "messages": "messages",
        "images": "images",
    },
    "tags": {
        "role_tag": "role",
        "content_tag": "content",
        "user_tag": "user",
        "assistant_tag": "assistant",
    },
}

write_json(js, dataset_info)
```

Add details about the newly created CORD dataset

Step 4: Set Hyperparameters

Hyperparameters are the settings that govern how your model learns. Typical hyperparameters include the learning rate, batch size, and number of epochs. You may need to tune these themselves across several training runs.

```yaml
### model
model_name_or_path: Qwen/Qwen2-VL-2B-Instruct

### method
stage: sft
do_train: true
finetuning_type: lora
lora_target: all

### dataset
dataset: cord
template: qwen2_vl
cutoff_len: 1024
max_samples: 1000
overwrite_cache: true
preprocessing_num_workers: 16

### output
output_dir: saves/cord-4/qwen2_vl-2b/lora/sft
logging_steps: 10
save_steps: 500
plot_loss: true
overwrite_output_dir: true

### train
per_device_train_batch_size: 8
gradient_accumulation_steps: 8
learning_rate: 1.0e-4
num_train_epochs: 10.0
lr_scheduler_type: cosine
warmup_ratio: 0.1
bf16: true
ddp_timeout: 180000000

### eval
val_size: 0.1
per_device_eval_batch_size: 1
eval_strategy: steps
eval_steps: 500
```

Add a new file at examples/train_lora/cord.yaml in LLaMA-Factory

We begin by specifying the base model, Qwen/Qwen2-VL-2B-Instruct. As mentioned above, we're using Qwen2 as our starting point, set via the model_name_or_path variable.

Our method is fine-tuning with LoRA, applied to all linear layers of the model (lora_target: all). LoRA is an efficient fine-tuning method that lets us train with fewer resources while maintaining model performance. This approach is particularly useful for our structured data extraction task on the CORD dataset.

Cutoff length limits the transformer's context length. Datasets whose examples have very long questions, answers, or both need a larger cutoff length, which in turn needs more GPU VRAM. In the case of CORD, the combined length of question and answer does not exceed 1024 tokens, so this cutoff mainly serves to filter out any anomalies that may be present in one or two examples.
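To check whether a cutoff like 1024 will actually bite, you can count how many examples exceed it before training. In the sketch below, `count_tokens` defaults to whitespace splitting purely as a stand-in; real subword tokenizers usually produce more tokens than words, so in practice you would pass a function built on the model's own tokenizer. Image tokens, which also occupy context in a VLM, are ignored here. The function name is hypothetical.

```python
def count_over_cutoff(examples, cutoff_len=1024, count_tokens=lambda s: len(s.split())):
    """Return indices of ShareGPT-style examples whose text exceeds cutoff_len tokens.

    count_tokens is a stand-in (whitespace split); swap in a tokenizer-based
    counter for real numbers. Image tokens are not counted.
    """
    over = []
    for i, ex in enumerate(examples):
        total = sum(count_tokens(m["content"]) for m in ex["messages"])
        if total > cutoff_len:
            over.append(i)
    return over
```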

We're using 16 preprocessing workers to speed up data handling. The cache is overwritten every time for consistency across runs.

The training settings are also tuned for performance. A batch size of 8 per device, combined with 8 gradient accumulation steps, effectively simulates a batch size of 64 examples on a single GPU. The learning rate of 1e-4 and a cosine learning rate scheduler with a warmup ratio of 0.1 help the model adjust gradually during training.
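The effective batch size and the learning-rate curve described above can be written out directly. The sketch below follows the usual linear-warmup-then-cosine-decay shape (the same shape as Hugging Face's `get_cosine_schedule_with_warmup`), with warmup steps taken as `ceil(total_steps × warmup_ratio)`; the function names are hypothetical, and the single-GPU default for `num_devices` is an assumption.

```python
import math


def cosine_with_warmup(step, total_steps, peak_lr=1e-4, warmup_ratio=0.1):
    """Learning rate at `step`: linear warmup to peak_lr, then cosine decay to 0."""
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return peak_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


def effective_batch_size(per_device=8, grad_accum=8, num_devices=1):
    """Examples contributing to each optimizer update."""
    return per_device * grad_accum * num_devices
```

With 100 total steps, the rate climbs linearly to 1e-4 over the first 10 steps, sits at about 5e-5 halfway through the decay (step 55), and reaches 0 at step 100.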

Ten is a good starting point for the number of epochs, since our dataset has only 800 examples. Usually, we aim for anywhere between 10,000 and 100,000 total training samples, depending on the variation in the images. With the above configuration, we're going with 10 × 800 = 8,000 training samples. If the dataset is too large and we need to train on only a fraction of it, we can either reduce num_train_epochs to a fraction or reduce max_samples to a smaller number.
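The sample-budget arithmetic is simple enough to keep as a helper when sizing new runs. These two functions are hypothetical conveniences; like the 10 × 800 figure above, they ignore the examples held out by `val_size`.

```python
def epochs_for_budget(num_examples, target_samples):
    """Epochs needed so that epochs * num_examples reaches target_samples."""
    return target_samples / num_examples


def samples_seen(num_examples, num_train_epochs, max_samples=None):
    """Total training samples, after max_samples (if set) caps the dataset size."""
    n = num_examples if max_samples is None else min(num_examples, max_samples)
    return n * num_train_epochs
```

`samples_seen(800, 10, max_samples=1000)` gives 8000, and `epochs_for_budget(800, 10_000)` gives 12.5, the epoch count that would reach the lower end of the 10,000 to 100,000 range.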

Evaluation is built into the workflow with a 10% validation split, evaluated every 500 steps to monitor progress. This lets us track performance during training and adjust parameters if necessary.
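It is worth checking how many optimizer steps the run actually contains, because `eval_steps` and `save_steps` are counted in those steps. The estimate below assumes a single GPU, that all 800 CORD training examples are used (`max_samples: 1000` does not cap them), that `val_size: 0.1` holds out 80 of them, and that a partial gradient-accumulation window at the end of an epoch counts as one step. The exact count can differ slightly depending on how LLaMA-Factory splits the data and how the trainer rounds; the function name is hypothetical.

```python
import math


def optimizer_steps(num_examples, val_size=0.1, per_device_batch=8,
                    grad_accum=8, num_devices=1, epochs=10):
    """Approximate (training examples, total optimizer updates) for the config above."""
    train_examples = num_examples - int(num_examples * val_size)
    per_epoch = math.ceil(train_examples / (per_device_batch * grad_accum * num_devices))
    return train_examples, per_epoch * epochs
```

Under these assumptions the run has roughly 120 optimizer steps, well short of 500, so `eval_steps: 500` (and `save_steps: 500`) may never trigger mid-run on this dataset; smaller values would give a usable validation curve.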

Step 5: Train the Adapter Model

Once everything is set up, it's time to start training. Since we're using LoRA, we train an adapter: small additional weights that plug into the existing VLM and update specific layers while the base model stays frozen, rather than retraining the entire model from scratch.

```shell
llamafactory-cli train examples/train_lora/cord.yaml
```

Training for 10 epochs used roughly 20 GB of GPU VRAM and ran for about 30 minutes.

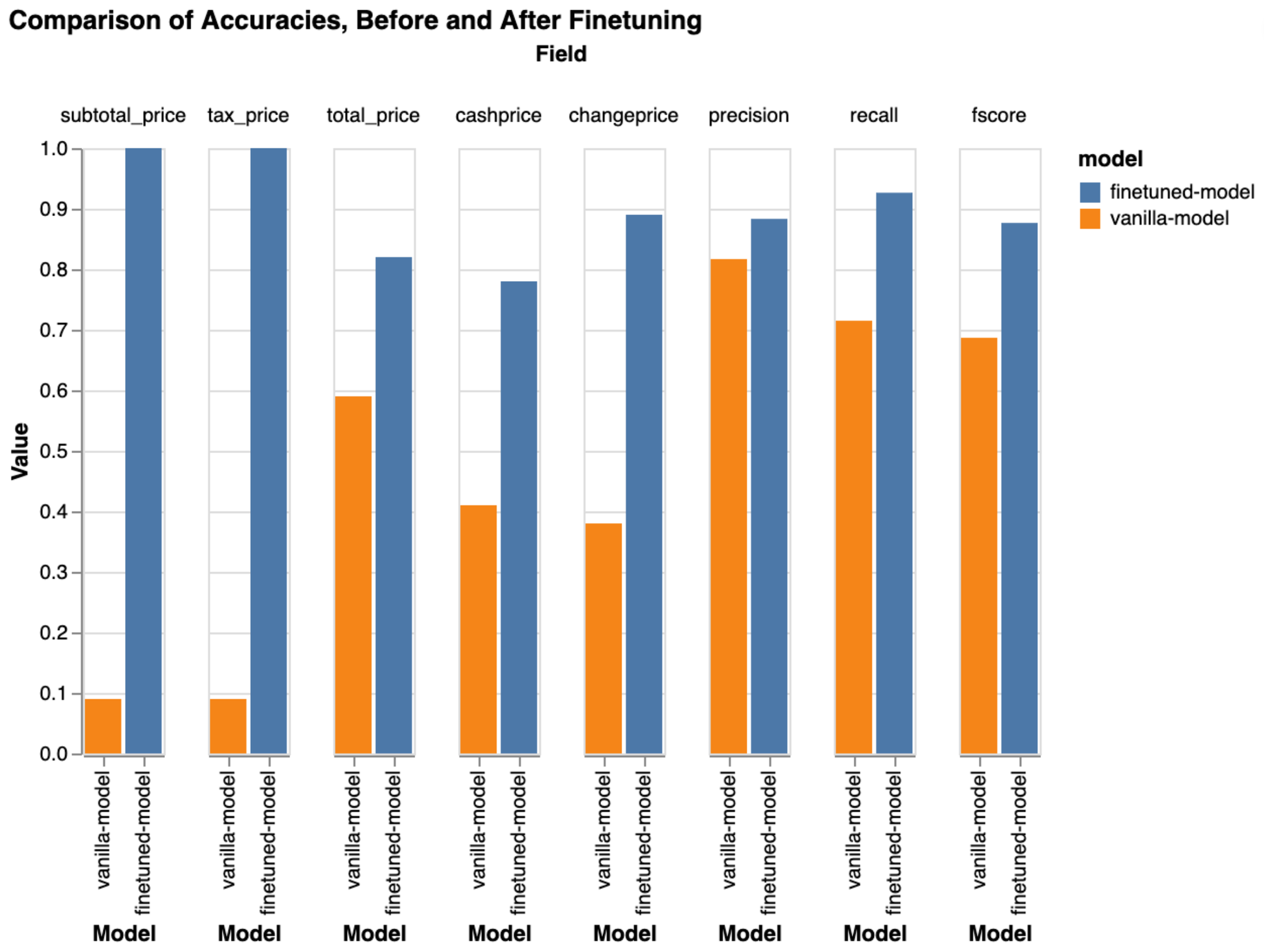

Evaluating the Model

Once the model has been fine-tuned, the next step is to run predictions on your dataset to evaluate how well it has adapted. We compare the previous results, under the group vanilla-model, with the latest results, under the group finetuned-model.
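The exact metrics behind this comparison aren't reproduced in this section. As an assumption, one simple way to score both groups is field-level exact match: flatten each predicted and ground-truth JSON into leaf fields and count how many ground-truth fields the prediction reproduces. The function names are hypothetical, and this scorer matches `menu` items by position.

```python
def flatten(d, prefix=""):
    """Flatten nested dicts/lists into {"total.total_price": ..., "menu.0.nm": ...}."""
    items = {}
    if isinstance(d, dict):
        for k, v in d.items():
            items.update(flatten(v, f"{prefix}{k}."))
    elif isinstance(d, list):
        for i, v in enumerate(d):
            items.update(flatten(v, f"{prefix}{i}."))
    else:
        items[prefix.rstrip(".")] = d
    return items


def field_accuracy(predictions, ground_truths):
    """Fraction of ground-truth leaf fields reproduced exactly, pooled over documents."""
    correct = total = 0
    for pred, gt in zip(predictions, ground_truths):
        flat_pred, flat_gt = flatten(pred), flatten(gt)
        for key, value in flat_gt.items():
            total += 1
            correct += flat_pred.get(key) == value  # missing keys count as wrong
    return correct / total if total else 0.0
```

For example, against a ground truth with a total price and one menu item (name and price), a prediction that gets the item name right but misses its price and misreads the total scores 1/3, while an exact copy scores 1.0.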

As shown below, there's a considerable improvement in many key metrics, demonstrating that the new adapter has successfully adapted to the dataset.

Things to Keep in Mind

- Multiple Hyperparameter Sets: Fine-tuning isn't a one-size-fits-all process. You'll likely need to experiment with different hyperparameter configurations to find the optimal setup for your dataset.

- Training the full model or LoRA: If training with an adapter shows no significant improvement, it's logical to switch to full model training by unfreezing all parameters. This provides greater flexibility, increasing the likelihood of learning effectively from the dataset.

- Thorough Testing: Be sure to rigorously test your model at every stage. This includes using validation sets and cross-validation techniques to make sure your model generalizes well to new data.

- Filtering Bad Predictions: Errors in your newly fine-tuned model can reveal underlying issues in the predictions. Use these errors to filter out bad predictions and refine your model further.

- Data/Image Augmentations: Use image augmentations to expand your dataset, improving generalization and fine-tuning performance.
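For filtering bad predictions, a practical first pass is to discard outputs that are not valid JSON or that contain top-level keys the extraction prompt never asked for (`menu`, `subtotal`, `total`). The sketch below is hypothetical and keeps the rejected raw outputs, so you can inspect them for recurring failure patterns.

```python
import json

# Top-level keys requested by the extraction prompt used to build the dataset
ALLOWED_TOP_LEVEL = {"menu", "subtotal", "total"}


def parse_prediction(raw):
    """Return the parsed dict, or None if the output is not usable JSON."""
    try:
        parsed = json.loads(raw.strip())
    except json.JSONDecodeError:
        return None
    if not isinstance(parsed, dict) or not set(parsed) <= ALLOWED_TOP_LEVEL:
        return None
    return parsed


def split_predictions(raw_outputs):
    """Separate usable predictions from bad ones so the bad ones can be inspected."""
    good, bad = {}, {}
    for i, raw in enumerate(raw_outputs):
        parsed = parse_prediction(raw)
        if parsed is None:
            bad[i] = raw
        else:
            good[i] = parsed
    return good, bad
```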

Conclusion

Fine-tuning a VLM can be a powerful way to enhance your model's performance on specific datasets. By carefully selecting your tuning method, setting the right hyperparameters, and thoroughly testing your model, you can significantly improve its accuracy and reliability.