[ad_1]

Up to date February 9, 2024 to incorporate the most recent iteration of Tower fashions.

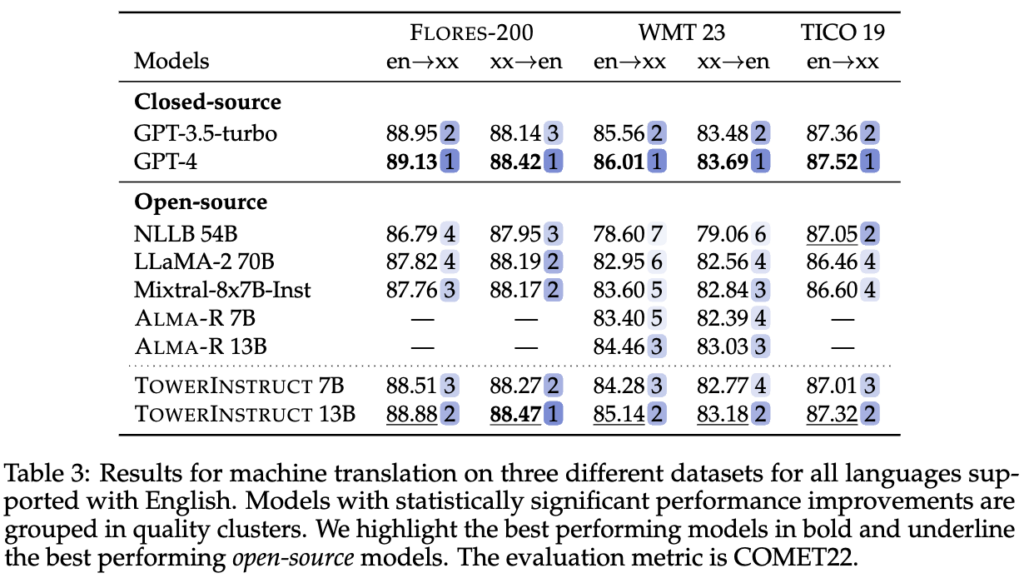

We’re thrilled to announce the discharge of Tower, a collection of multilingual giant language fashions (LLM) optimized for translation-related duties. Tower is constructed on prime of LLaMA2 [1], is available in two sizes — 7B and 13B parameters —, and presently helps 10 languages: English, German, French, Spanish, Chinese language, Portuguese, Italian, Russian, Korean, and Dutch. It’s presently the strongest open-weight mannequin for translation — surpassing devoted translation fashions and LLMs of a lot larger scale, similar to NLLB-54B, ALMA-R, and LLaMA-2 70B — and it goes so far as to be aggressive with closed fashions like GPT-3.5 and GPT-4. Tower additionally masters a variety of different translation-related duties, starting from pre-translation duties, similar to grammatical error correction, to translation and analysis duties, similar to machine translation (MT), computerized post-editing (APE), and translation rating. When you’re engaged on multilingual NLP and associated issues, go forward and take a look at Tower.

The coaching and launch of the Tower mannequin is a joint effort of Unbabel, the SARDINE Lab at Instituto Superior Técnico, and the MICS lab at CentraleSupélec on the College of Paris-Saclay. The objective of this launch is to advertise collaborative and reproducible analysis to facilitate information sharing and to drive additional developments to multilingual LLMs and associated analysis. As such, we’re comfortable to:

- Launch the weights of our Tower fashions: TowerBase and TowerInstruct.

- Launch the info that we used to fine-tune these fashions: TowerBlocks.

- Launch the analysis knowledge and code: TowerEval, an LLM analysis repository for MT-related duties.

From LLaMA2 to Tower: how we reworked an English-centric LLM right into a multilingual one

Giant language fashions took the world by storm final yr. From GPT-3.5 to LLaMA and Mixtral, closed and open-source LLMs have demonstrated more and more sturdy capabilities for fixing pure language duties. Machine translation is not any exception: GPT-4 was amongst final yr’s greatest translation methods for a number of language instructions within the WMT2023’s Normal Translation observe, probably the most established benchmark within the discipline.

Sadly, the story will not be the identical with present open-source fashions; these are predominantly constructed with English knowledge and little to no multilingual knowledge and are but to make a major dent in translation and associated duties, like computerized post-edition, computerized translation analysis, amongst others. We wanted to bridge this hole, so we got down to construct a state-of-the-art multilingual mannequin on prime of LLaMA2.

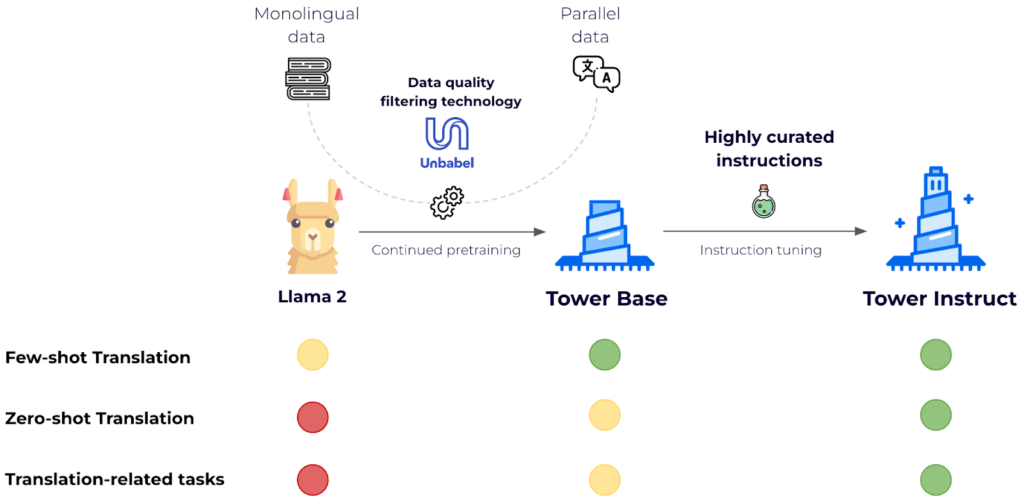

This required two steps: continued pre-training and instruction tuning. The previous is crucial to enhance LLaMA2’s help to different languages, and the latter takes the mannequin to the subsequent stage by way of fixing particular duties in a 0-shot trend.

For continued pretraining, we leveraged 20 billion tokens of textual content evenly cut up amongst languages. Two-thirds of the tokens come from monolingual knowledge sources — a filtered model of the mc4 [3] dataset — and one-third are parallel sentences from varied public sources similar to OPUS [5]. Crucially, we leverage Unbabel expertise, COMETKiwi [2], to filter for high-quality parallel knowledge. The result is a considerably improved model of LLaMA2 for the goal languages that maintains its capabilities in English: TowerBase. The languages supported by the present model are English, German, French, Chinese language, Spanish, Portuguese, Italian, Dutch, Korean, and Russian.

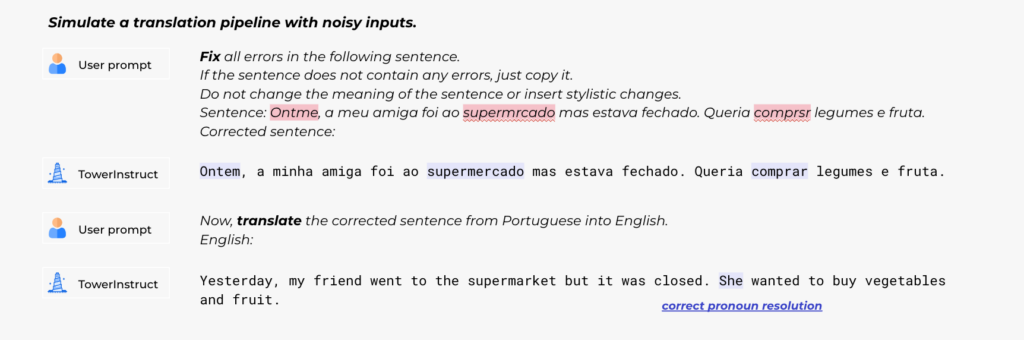

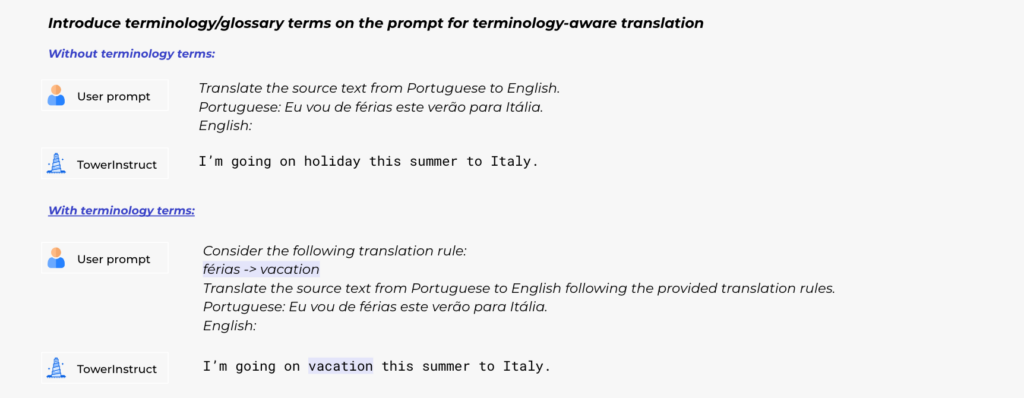

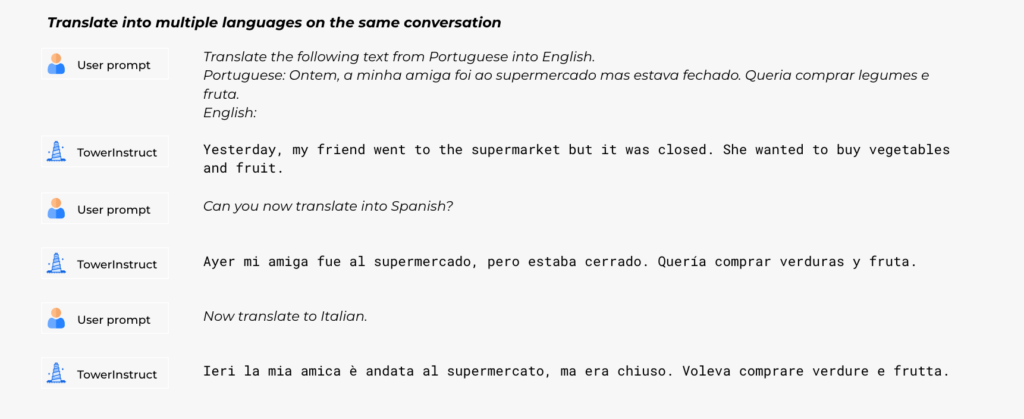

For supervised fine-tuning, we fastidiously constructed a dataset with various, high-quality task-specific data, in addition to conversational knowledge and code directions. We manually constructed a whole lot of various prompts throughout all duties, together with zero and few-shot templates. Our dataset, TowerBlocks, consists of knowledge for a number of translation-related duties, similar to computerized put up version, machine translation and its completely different variants (e.g., context-aware translation, terminology-aware translation, multi-reference translation), named-entity recognition, error span prediction, paraphrase era, and others. The info data had been fastidiously filtered utilizing completely different heuristics and high quality filters, similar to COMETKiwi, to make sure using high-quality knowledge at fine-tuning time. Greater than another issue, this filtering, mixed with cautious alternative of hyperparameters, performed a vital position in acquiring important enhancements over the continued pre-trained mannequin. The ensuing mannequin, TowerInstruct, handles a number of duties seamlessly in a 0-shot trend — bettering effectivity at inference time — and might remedy different held-out duties with applicable immediate engineering. Specifically, for machine translation, TowerInstruct showcases wonderful efficiency, outperforming fashions of bigger scale and devoted translation fashions, similar to Mixtral-8x7B-Instruct [7], LLaMA-2 70B [1], ALMA-R [6] and NLLB 54B [8]. In reality, TowerInstruct is the present greatest open-weight mannequin for machine translation. Furthermore, it’s a very sturdy competitor of closed fashions like GPT-3.5 and GPT-4: TowerInstruct could be very a lot on par with GPT-3.5 and might compete in some language pairs with GPT-4. And this isn’t all: for computerized post-edition, named-entity recognition and supply error correction, TowerInstruct outperforms GPT3.5 and Mixtral 8x7B throughout the board, and might go so far as outperforming GPT4.

Utilizing the Tower fashions

We’re releasing each pre-trained and instruction-tuned mannequin weights, in addition to the instruction tuning and analysis knowledge. We’re additionally releasing TowerEval, an analysis repository targeted on MT and associated duties that may enable customers to breed our benchmarks and consider their very own LLMs. We invite you to go to our Huggingface web page and GitHub repository and begin utilizing them!

These Tower fashions are solely the start: internally, we’re engaged on leveraging Unbabel expertise and knowledge to enhance our translation platform. Transferring ahead, we plan to make much more thrilling releases, so keep tuned!

Acknowledgments

A part of this work was supported by the EU’s Horizon Europe Analysis and Innovation Actions (UTTER, contract 101070631), by the challenge DECOLLAGE (ERC-2022-CoG 101088763), and by the Portuguese Restoration and Resilience Plan by way of challenge C645008882- 00000055 (Heart for Accountable AI). We thank GENCI-IDRIS for the technical help and HPC sources used to partially help this work.

References

[1] Llama 2: Open Basis and Fantastic-Tuned Chat Fashions. Technical report

[2] Scaling up CometKiwi: Unbabel-IST 2023 Submission for the High quality Estimation Shared Activity. WMT23

[4] Parallel Information, Instruments and Interfaces in OPUS. LREC2012

[5] A Paradigm Shift in Machine Translation: Boosting Translation Efficiency of Giant Language Fashions

[6] Contrastive Choice Optimization: Pushing the Boundaries of LLM Efficiency in Machine Translation

[8] No Language Left Behind: Scaling Human-Centered Machine Translation

[ad_2]