[ad_1]

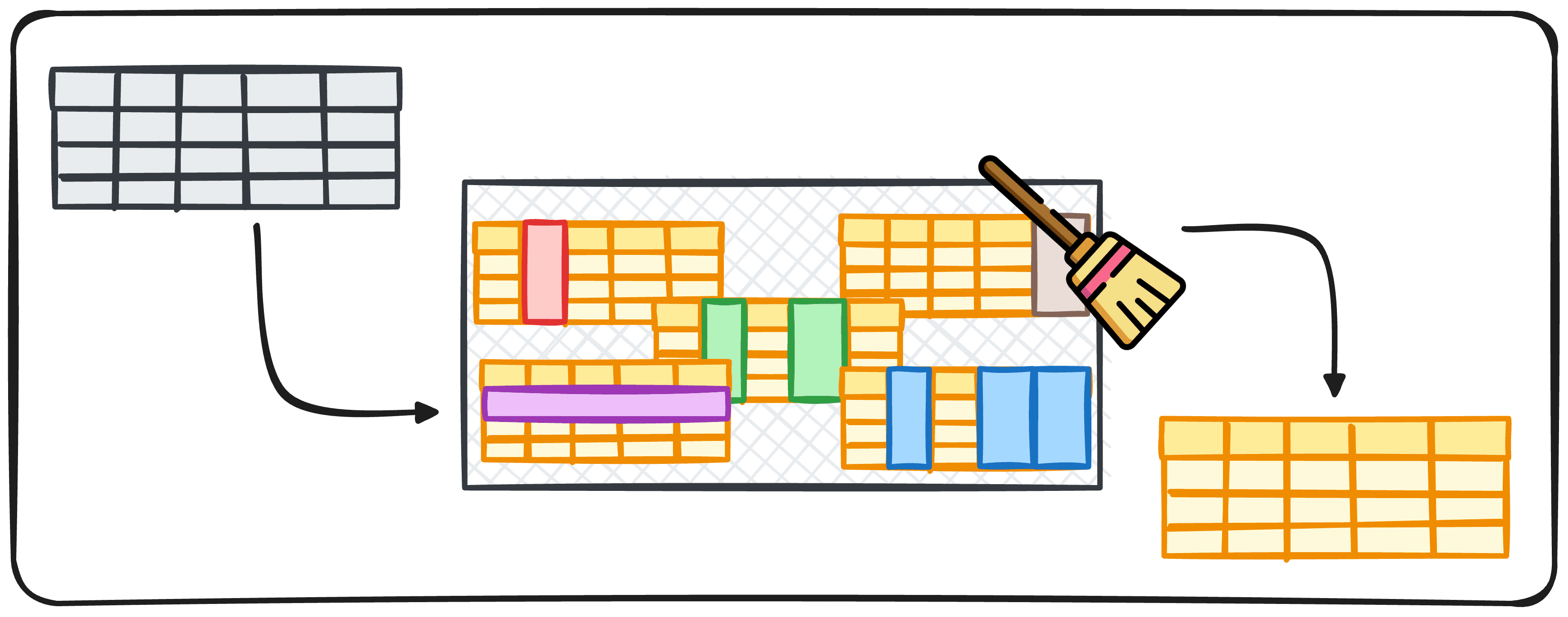

Picture by Creator

It’s a extensively unfold reality amongst Knowledge Scientists that knowledge cleansing makes up an enormous proportion of our working time. Nonetheless, it is likely one of the least thrilling elements as properly. So this results in a really pure query:

Is there a solution to automate this course of?

Automating any course of is all the time simpler mentioned than achieved for the reason that steps to carry out rely totally on the particular undertaking and purpose. However there are all the time methods to automate, at the least, among the elements.

This text goals to generate a pipeline with some steps to verify our knowledge is clear and prepared for use.

Knowledge Cleansing Course of

Earlier than continuing to generate the pipeline, we have to perceive what elements of the processes may be automated.

Since we need to construct a course of that can be utilized for nearly any knowledge science undertaking, we have to first decide what steps are carried out time and again.

So when working with a brand new knowledge set, we normally ask the next questions:

- What format does the info are available?

- Does the info include duplicates?

- Does the knowledge include lacking values?

- What knowledge varieties does the info include?

- Does the info include outliers?

These 5 questions can simply be transformed into 5 blocks of code to cope with every of the questions:

1.Knowledge Format

Knowledge can come in numerous codecs, comparable to JSON, CSV, and even XML. Each format requires its personal knowledge parser. As an example, pandas present read_csv for CSV information, and read_json for JSON information.

By figuring out the format, you’ll be able to select the fitting instrument to start the cleansing course of.

We are able to simply determine the format of the file we’re coping with utilizing the trail.plaintext operate from the os library. Due to this fact, we are able to create a operate that first determines what extension we now have, after which applies on to the corresponding parser.

2. Duplicates

It occurs very often that some rows of the info include the identical precise values as different rows, what we all know as duplicates. Duplicated knowledge can skew outcomes and result in inaccurate analyses, which isn’t good in any respect.

That is why we all the time want to verify there aren’t any duplicates.

Pandas received us coated with the drop_duplicated() technique, which erases all duplicated rows of a dataframe.

We are able to create an easy operate that makes use of this technique to take away all duplicates. If needed, we add a columns enter variable that adapts the operate to remove duplicates primarily based on a selected record of column names.

3. Lacking Values

Lacking knowledge is a typical challenge when working with knowledge as properly. Relying on the character of your knowledge, we are able to merely delete the observations containing lacking values, or we are able to fill these gaps utilizing strategies like ahead fill, backward fill, or substituting with the imply or median of the column.

Pandas gives us the .fillna() and .dropna() strategies to deal with these lacking values successfully.

The selection of how we deal with lacking values is dependent upon:

- The kind of values which might be lacking

- The proportion of lacking values relative to the variety of whole data we now have.

Coping with lacking values is a fairly complicated job to carry out – and normally one of the vital essential ones! – you’ll be able to be taught extra about it within the following article.

For our pipeline, we’ll first test the full variety of rows that current null values. If solely 5% of them or much less are affected, we’ll erase these data. In case extra rows current lacking values, we’ll test column by column and can proceed with both:

- Imputing the median of the worth.

- Generate a warning to additional examine.

On this case, we’re assessing the lacking values with a hybrid human validation course of. As you already know, assessing lacking values is an important job that may not be missed.

When working with common knowledge varieties we are able to proceed to rework the columns instantly with the pandas .astype() operate, so you could possibly truly modify the code to generate common conversations.

In any other case, it’s normally too dangerous to imagine {that a} transformation will probably be carried out easily when working with new knowledge.

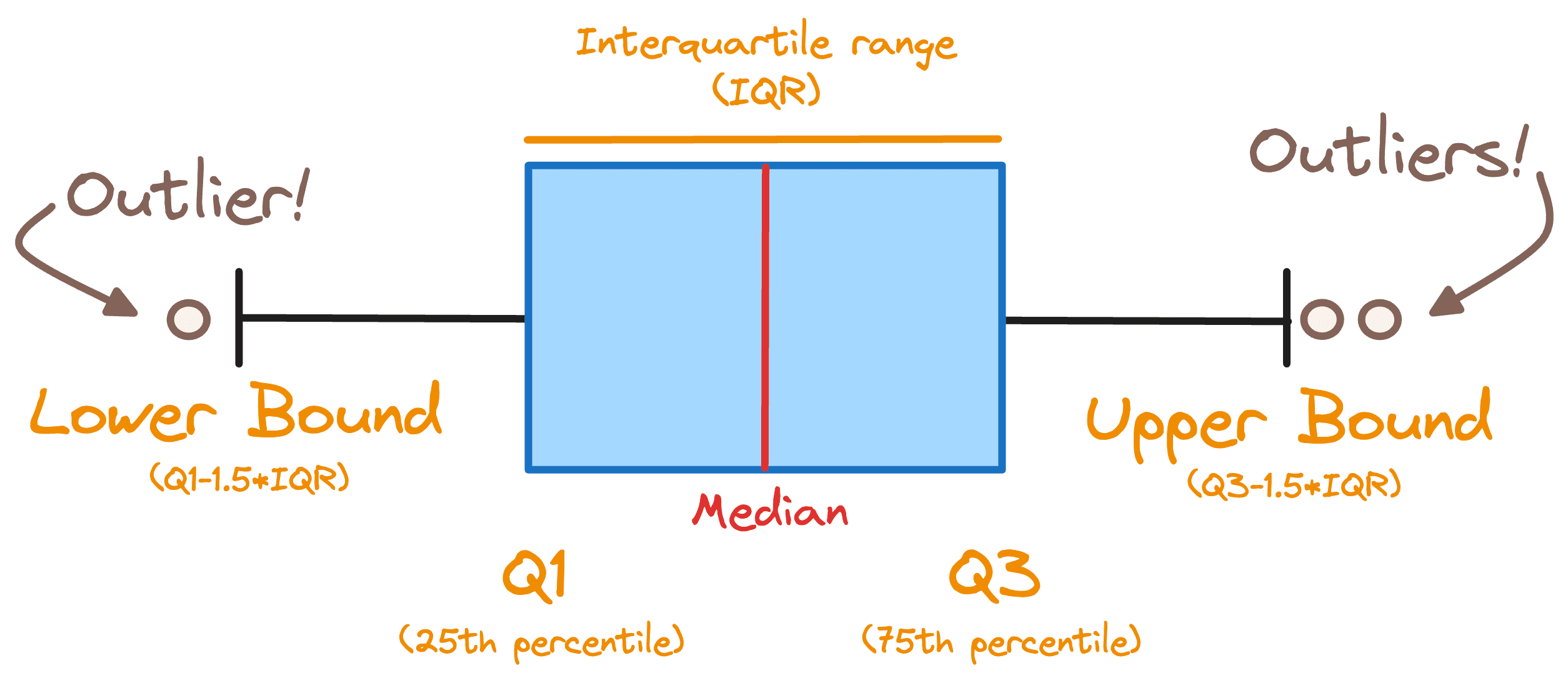

5. Coping with Outliers

Outliers can considerably have an effect on the outcomes of your knowledge evaluation. Strategies to deal with outliers embody setting thresholds, capping values, or utilizing statistical strategies like Z-score.

With a purpose to decide if we now have outliers in our dataset, we use a typical rule and think about any file outdoors of the next vary as an outlier. [Q1 — 1.5 * IQR , Q3 + 1.5 * IQR]

The place IQR stands for the interquartile vary and Q1 and Q3 are the first and the third quartiles. Under you’ll be able to observe all of the earlier ideas displayed in a boxplot.

Picture by Creator

Remaining Ideas

Knowledge Cleansing is an important a part of any knowledge undertaking, nonetheless, it’s normally essentially the most boring and time-wasting section as properly. That is why this text successfully distills a complete strategy right into a sensible 5-step pipeline for automating knowledge cleansing utilizing Python and.

The pipeline isn’t just about implementing code. It integrates considerate decision-making standards that information the person by means of dealing with totally different knowledge eventualities.

This mix of automation with human oversight ensures each effectivity and accuracy, making it a sturdy resolution for knowledge scientists aiming to optimize their workflow.

You possibly can go test my complete code within the following GitHub repo.

Josep Ferrer is an analytics engineer from Barcelona. He graduated in physics engineering and is presently working within the knowledge science area utilized to human mobility. He’s a part-time content material creator targeted on knowledge science and expertise. Josep writes on all issues AI, masking the appliance of the continuing explosion within the area.

[ad_2]